Shamma, Horiuchi co-PIs on NSF cortical architectures grant

UMIACS Associate Research Scientist Cornelia Fermüller is the principal investigator for a three-year, $750K National Science Foundation grant, "Cortical Architectures for Robust Adaptive Perception and Action." Co-PIs include Professor Shihab Shamma (ECE/ISR); Associate Professor Timothy Horiuchi (ECE/ISR); and Johns Hopkins University Professors Andreas Andreou and Ralph Etienne-Cummings.

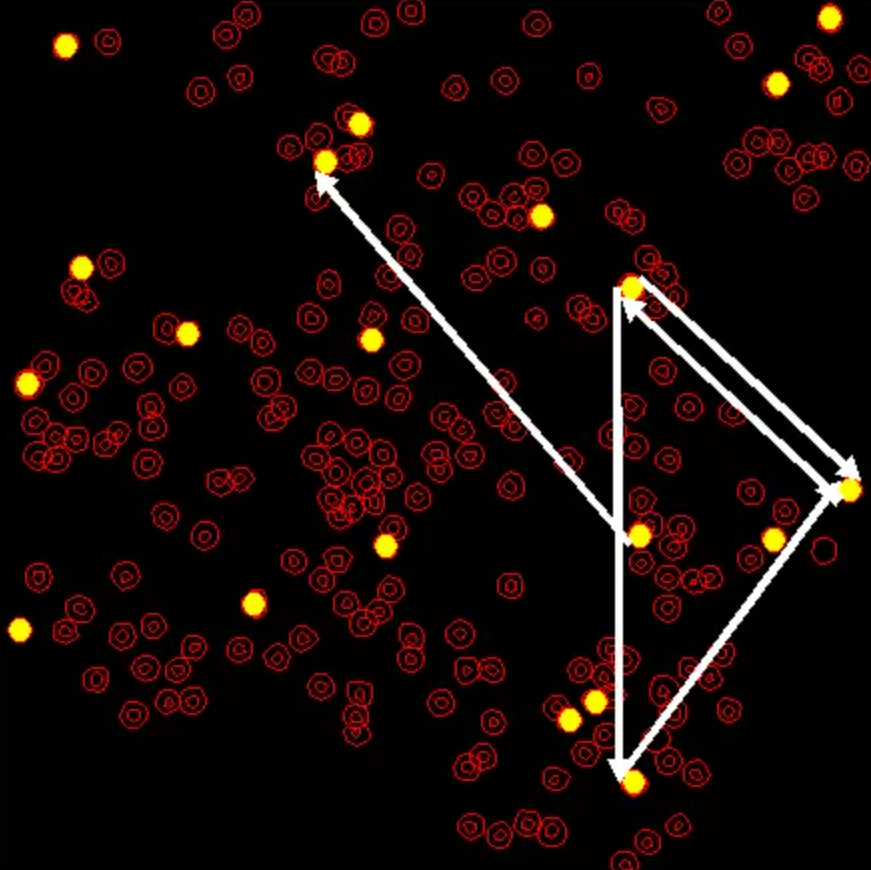

The motivation for this biologically inspired approach is to design systems that can perceive and act in cluttered, noisy scenes they have never experienced. This contrasts with today's state-of-the-art engineered systems, which must be retrained each time they confront an unanticipated environment. The main reason for this limitation is that current approaches to perception address specific problems in isolation and overlook the fact that the primary role of perception is to support embodied systems in action. As a result, these systems are confined to the situations for which they were trained and cannot react to changing tasks and scenes.

By focusing on cognitive primitives rather than specific applications, the work is expected to substantially advance the state of the art in machine perception and to lead to systems that can adapt robustly and online to new environments, react to novel situations, and learn new contexts. To this end, the researchers will study novel theoretical formulations of perception and action, along with high-speed, low-power hardware implementations with online learning capabilities, while incorporating new insights from neuroscience. In doing so, the project will bring together neuroscience, cognitive science, applied mathematics, computer science, and engineering to lower one of the few remaining barriers that keep interactive robots in the realm of science fiction.

Beyond its scholarly contribution, the work is expected to provide know-how for designing adaptive-perception systems in a modular fashion from reusable components. Such systems have applications in computational vision and auditory perception, and they can advance the industry of cognitive, biologically inspired robotics and assistive devices.

Nearly every task an intelligent system solves involves the interplay of four basic processes, devoted to (a) context, (b) attention, (c) segmentation, and (d) categorization. The members of the proposed network will study these canonical cognitive primitives by combining neural modeling with neural and behavioral experiments, theoretical and computational modeling, and implementation in robots. The resulting theoretical insights will then be adapted to the demands of realistic behavior and used to develop technological solutions for robust, invariant perception and action.

Published September 15, 2015